AWS says it charges nothing for data ingress, and it is telling the truth.

On the face of things, that statement is extremely uninteresting. How I went about validating it, however, might prove slightly more interesting to some folks.

How AWS data transfer pricing works

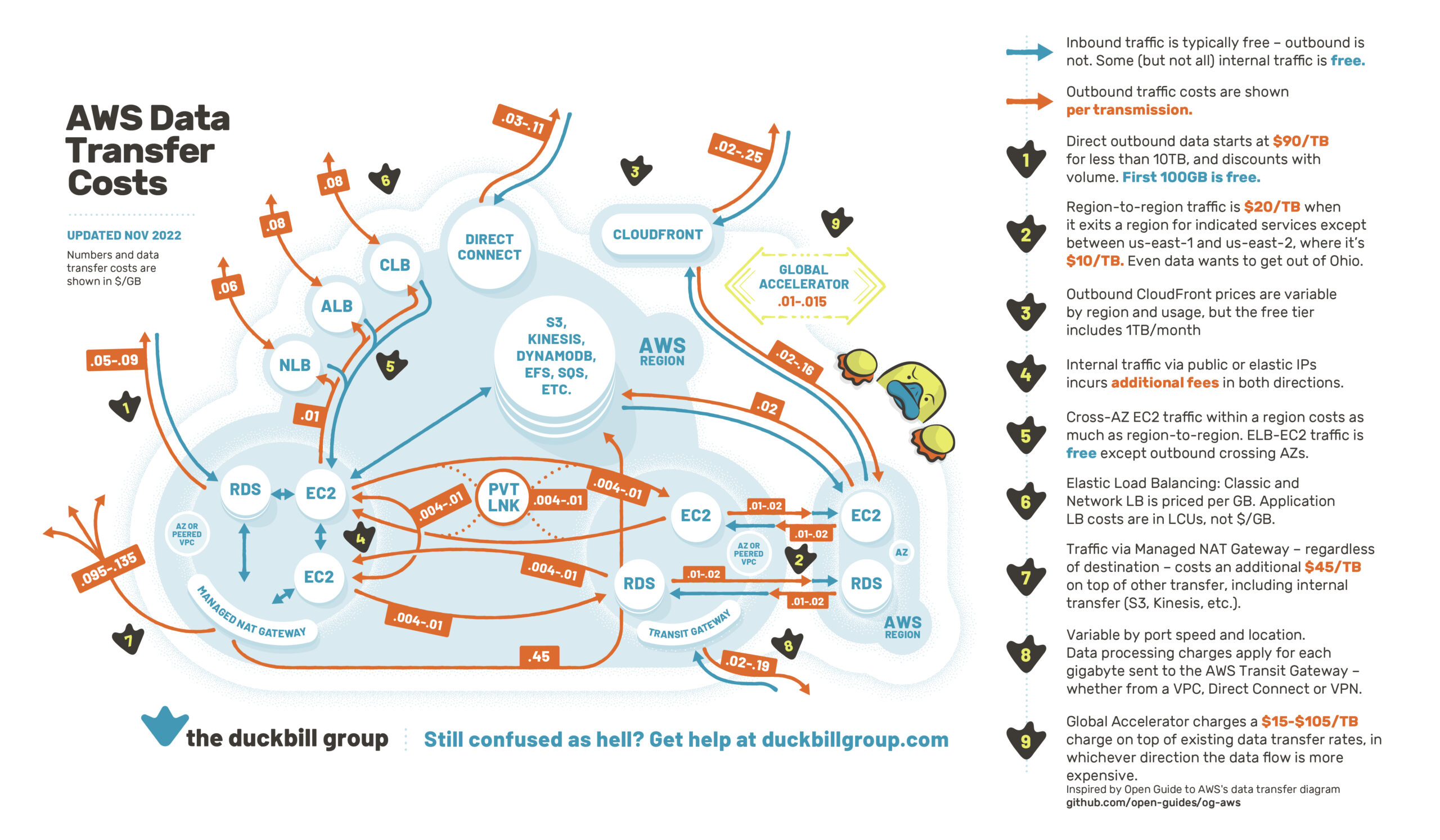

AWS’s data transfer pricing is so ludicrously complex that I had to build a diagram to understand it:

This generally distills to: Outbound traffic is an inscrutable-yet-probably-unreasonably-large amount of money, and inbound traffic is free. But I got to wondering just how “free” inbound traffic really is.

My question stems from the way Transmission Control Protocol (TCP) works. With TCP, the receiver sends packets back to the sender acknowledging the data it has received. Should an acknowledgement not arrive, the sender retransmits the data.

Those acknowledgement packets, while small, are definitionally traffic that is egressing from AWS. Are customers charged for this? If so, this may add up to something significant at scale for some workloads. After all, every AWS customer effectively takes AWS on faith when it tells them how many giga-/tera-/peta-/exabytes of data transfer they had last month. Very few customers have an on-the-wire level of insight into their traffic volumes. Even the most aggrieved, bean-counting customer with a grudge against Amazon doesn’t conduct an internal audit of these things because they’re so hard to validate and verify.
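To get a feel for how much acknowledgement traffic might be at stake, here is a rough back-of-the-envelope sketch in Python. Every default in it is an assumption rather than something measured: 1,448 payload bytes per segment (a 1,500-byte MTU with TCP timestamps enabled), one delayed acknowledgement for every two segments, 52 bytes per acknowledgement at the IP layer, decimal gigabytes, and no retransmissions or selective acknowledgements. The function name is made up for illustration.

```python
import math


def estimate_ack_egress_bytes(
    payload_bytes: int,
    payload_per_segment: int = 1448,  # assumption: 1500-byte MTU, TCP timestamps on
    segments_per_ack: int = 2,        # assumption: delayed ACKs, one per two segments
    ack_packet_bytes: int = 52,       # assumption: 20 IP + 20 TCP + 12 option bytes
) -> int:
    """Estimate the bytes of pure-ACK traffic a receiver emits for a given payload."""
    segments = math.ceil(payload_bytes / payload_per_segment)
    acks = math.ceil(segments / segments_per_ack)
    return acks * ack_packet_bytes


if __name__ == "__main__":
    payload = 100 * 10**9  # 100 GB, using decimal gigabytes
    egress = estimate_ack_egress_bytes(payload)
    print(f"~{egress / 10**9:.2f} GB of ACKs for {payload / 10**9:.0f} GB received")
```

Under these assumptions the estimate lands somewhere under 2% of the inbound volume. Real connections will differ depending on MTU, offload settings, and packet loss, so treat the output as an order-of-magnitude guide only.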

Obviously, the solution here was for me to do an experiment or two so that other people wouldn’t have to test it themselves.

Experiment 1: Amazon S3 test

I started with something easy: S3. It’s a fully managed service, there was nothing for me to manage myself past the upload, and, as a result, there was nothing for me to misconfigure to screw a test up.

A few days before I ran the test, AWS helpfully spun up a new me-central-1 region in the United Arab Emirates. Since I knew I had nothing running in that region in one of my test accounts, anything that showed up in that region’s bill would be a result of my experiment.

I then used dd to generate 100 1-gigabyte files in a loop, filled with fresh emptiness from /dev/zero. I don’t know what Apple’s done with its filesystems lately, but this returned instantly, so I was convinced it had failed — but no! It worked! Nice job, Apple engineers.
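For readers who would rather not reach for dd, a minimal Python sketch of the same idea follows. The function name, file naming scheme, and chunk size are illustrative assumptions, not what was used in the experiment.

```python
import os


def make_zero_files(directory: str, count: int, size_bytes: int,
                    chunk_bytes: int = 1024 * 1024) -> list:
    """Create `count` files of `size_bytes` zero bytes each and return their paths."""
    os.makedirs(directory, exist_ok=True)
    chunk = bytes(chunk_bytes)  # a reusable buffer of zeros
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"zeros-{i:03d}.bin")  # hypothetical naming
        with open(path, "wb") as fh:
            remaining = size_bytes
            while remaining > 0:
                n = min(remaining, chunk_bytes)
                fh.write(chunk[:n])
                remaining -= n
        paths.append(path)
    return paths
```

Calling `make_zero_files("testdata", 100, 10**9)` would produce roughly the same 100 one-gigabyte files, assuming decimal gigabytes; it needs about 100GB of free disk.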

I wrote a loop to iterate through those 100 files, generate a pre-signed URL for S3 for each one, and then use cURL to POST the file to S3. I wanted to avoid using higher-level SDKs or the AWS CLI for the uploading because those things do what you would normally want them to do: They speed through large files via multipart upload. The problem was that they might also enable compression for the transfer, and a big file completely full of zeros compresses super well. I also didn’t want it to retry on failed uploads; precision on these things is somewhat important!
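The "one request, no compression, no retries" requirement can be sketched with nothing but the Python standard library. This is an illustration under assumptions, not the cURL loop that was actually run: the pre-signed URL is assumed to exist already, `upload_once` is a hypothetical helper name, and the HTTP method is a parameter because it has to match whatever the URL was signed for.

```python
import http.client
import os
from urllib.parse import urlsplit


def upload_once(presigned_url: str, path: str, method: str = "PUT") -> int:
    """Send one file in a single request, uncompressed, with no retry; return the status."""
    parts = urlsplit(presigned_url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=300)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query  # the signature lives in the query string
    headers = {
        # An explicit length keeps the body from being sent with chunked encoding.
        "Content-Length": str(os.path.getsize(path)),
        "Accept-Encoding": "identity",
    }
    try:
        with open(path, "rb") as body:
            conn.request(method, target, body=body, headers=headers)
            response = conn.getresponse()
            response.read()  # drain the (small) response body
            return response.status
    finally:
        conn.close()
```

`http.client` streams the file as-is and never retries on its own, so a failure surfaces as an exception or a non-2xx status instead of silently doubling the bytes on the wire.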

I let the loop run to completion, verified that there weren’t any errors, then settled in to let the billing system reconcile what it thought it saw over a multi-day span. I set a reminder to check it after a long weekend, and I busied myself with other things.

Experiment 1 results

When the dust settled, the bill reflected that there were 100.000000270 gigabytes of inbound data transfer. Someone at AWS is going to lose their freaking mind over those 270 bytes, but because inbound is free, that is not my problem to deal with.

Outbound data transfer showed exactly 1 kilobyte of data egressing. Were it not in the free tier, I would absolutely be opening a case demanding my 11 millionths of a penny back in the form of a credit.

What this demonstrates is that AWS does what it says on the tin: You’re metered on the volume of the data you send it, not on the protocol overhead that comes along with it.

At least, so long as you use S3.

Experiment 2: Amazon EC2

If I run an EC2 instance and get it to just sit there and accept traffic that I send it, data transfer is going to work slightly differently. Most traffic these days is encrypted in transit; this means that AWS has no visibility into what the payload of any given packet is. I would expect AWS to charge me for the overhead of the acknowledgement packets.

For this one, I spun up an EC2 instance in the Bahrain region. I didn’t SSH into it at all; instead, I used AWS Systems Manager Session Manager, which is explicitly called out as costing nothing when used to connect to EC2 instances, to log into the node and set up a netcat listener.

Then I sent the same 100 1GB files via netcat in a loop from my desktop, validated there were no errors, took a three-day weekend, and checked the billing system.
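The sending side of that netcat transfer amounts to opening a TCP connection and streaming the file into it. A minimal Python equivalent is sketched below; the function name and chunk size are assumptions for illustration, and the host and port would be whatever the listener on the instance is bound to.

```python
import socket


def send_file(host: str, port: int, path: str, chunk_bytes: int = 1024 * 1024) -> int:
    """Stream a file over a plain TCP connection and return the bytes sent."""
    sent = 0
    with socket.create_connection((host, port)) as sock, open(path, "rb") as fh:
        while True:
            chunk = fh.read(chunk_bytes)
            if not chunk:
                break
            sock.sendall(chunk)  # blocks until the whole chunk is handed to the kernel
            sent += len(chunk)
    return sent
```

Returning the byte count gives a sender-side figure to compare against whatever the bill and the instance metrics later report.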

Experiment 2 results

Running an EC2 instance produced somewhat more interesting results, some of which I don’t fully understand.

The AWS bill reflected 103.639GB of inbound data transfer. Clearly, protocol overhead is being measured here in a way that it isn’t when working with S3. The 3.6% difference is within most reasonable levels of tolerance, but it’s good to know.

I was billed for 1.25GB of data transfer out during this time period. That feels directionally correct for the relative size of acknowledgement packets.

But here’s where it gets strange.

I can get detailed metrics in CloudWatch out of the network interface on the EC2 instance. CloudWatch shows that the instance saw 112GB of data ingress during the testing time frame. I could see the Systems Manager agent tossing a few megabytes back and forth for half a day as it checks in with various things, but not over 10GB of traffic in a day. And the CloudWatch metrics show that the instance emitted 1.71GB of traffic during the test window, while the billing system swears it only saw 1.25GB.
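To put the gaps side by side, the figures quoted above can be run through a few lines of Python. The numbers are the ones reported in this experiment; the helper name and the choice of baselines are illustrative assumptions.

```python
# Figures reported for the EC2 experiment, in GB.
SENT_GB = 100.0
BILLED_IN_GB = 103.639
BILLED_OUT_GB = 1.25
CLOUDWATCH_IN_GB = 112.0
CLOUDWATCH_OUT_GB = 1.71


def percent_over(observed: float, baseline: float) -> float:
    """Return how far `observed` exceeds `baseline`, as a percentage of the baseline."""
    return (observed - baseline) / baseline * 100.0


if __name__ == "__main__":
    print(f"Billed ingress vs. payload sent:  +{percent_over(BILLED_IN_GB, SENT_GB):.1f}%")
    print(f"CloudWatch vs. billed ingress:    +{percent_over(CLOUDWATCH_IN_GB, BILLED_IN_GB):.1f}%")
    print(f"CloudWatch vs. billed egress:     +{percent_over(CLOUDWATCH_OUT_GB, BILLED_OUT_GB):.1f}%")
```

Seen this way, the two systems disagree far more about egress in relative terms than about ingress, even though the absolute egress gap is under half a gigabyte.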

My highly scientific takeaways on AWS data ingress pricing

From my two experiments with data transfer, we can surmise a few things.

First, AWS’s CloudWatch and Billing teams should probably have a group lunch and introduce themselves to one another; I bet they’d have lots to talk about. Plus, the haggling over how much the tip should be would be absolutely legendary.

Second, AWS basically does what I’d expect it to. None of the data volumes I saw were cause for alarm, and AWS isn’t being creepy and deeply inspecting your traffic in the name of billing.

And finally, every customer who pays zero attention to this aspect of data transfer pricing is absolutely correct to ignore it, but now I’ve spent a whole bunch of time proving it.