I’ve been beating Azure up a fair bit lately, which is no great surprise to anyone who’s a regular reader of this publication. A friend of mine mentioned over drinks that she thinks of Azure as “the Boomer cloud,” at which point I spat my own drink out and immediately knew what I had to do:

Build something on Google Cloud Functions. To the Google Cloud console I go!

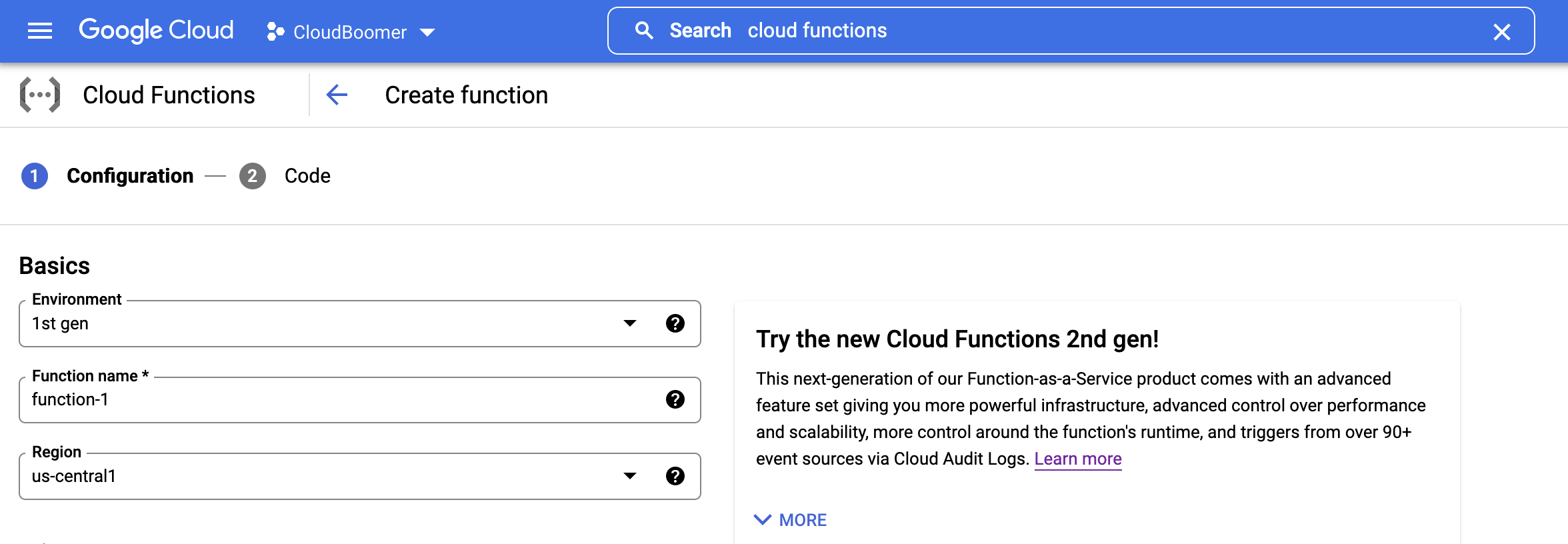

I think of Google Cloud Functions more or less as “AWS Lambda with Google branding.” That’s my baseline starting point. Unlike AWS, which hurls you into a morass of options that assumes you know what you’re doing, the wizard that Google Cloud offers is pretty straightforward. It starts by asking whether I want to use the 1st generation or 2nd generation function options.

A quick perusal of the differences shows me that this is pretty typical for a Google offering; generation 1 is deprecated while generation 2 is in beta. Given that I’m a sysadmin historically, I bias for “old and busted” over “new hotness,” so generation 1 it is.

Next, it asks me what to call it (which is easy since I’m not about to delegate naming anything to a cloud provider based solely upon its track record of screwing that particular thing up). It also asks me which region to put this in, which I absolutely do not care about in the slightest, so I accept the default on the theory that they wouldn’t suggest something currently on fire.

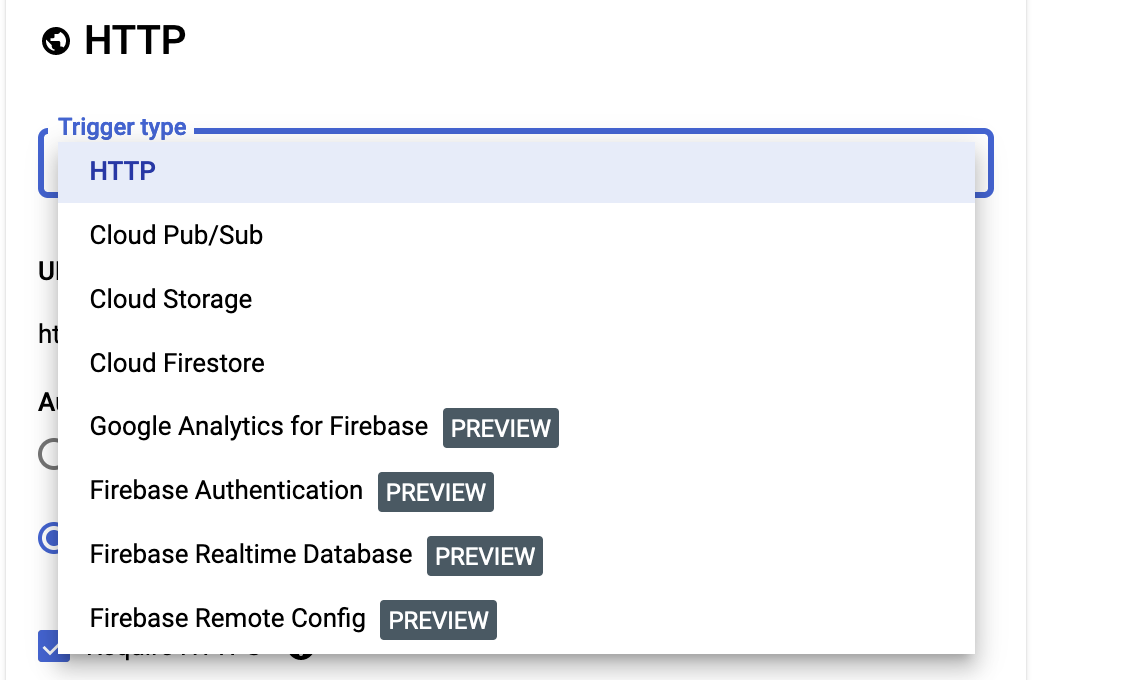

The next thing it cares about is the trigger for the function invocation. I’m met with eight potential options, four of which are marked “PREVIEW” in the drop-down menu.

At this point, I want a function that’s going to fire off every five minutes or so, and none of the various options presented seem to grant that. A bit of googling shows that Google has taken a page from AWS’s playbook on this: the right approach is to set up a job in Google Cloud Scheduler that fires every five minutes. The job then tosses a message onto a Google Pub/Sub topic, which the Cloud Function can use as its trigger.

I admit, I cringed at this. AWS has taught me that whenever you have to cross service team boundaries like this, the experience is going to absolutely suck for you. I was therefore thrilled to learn that apparently Google doesn’t have internal policies against one service team talking to another service team every once in a while, and all of this took a few clicks in the console to set up.

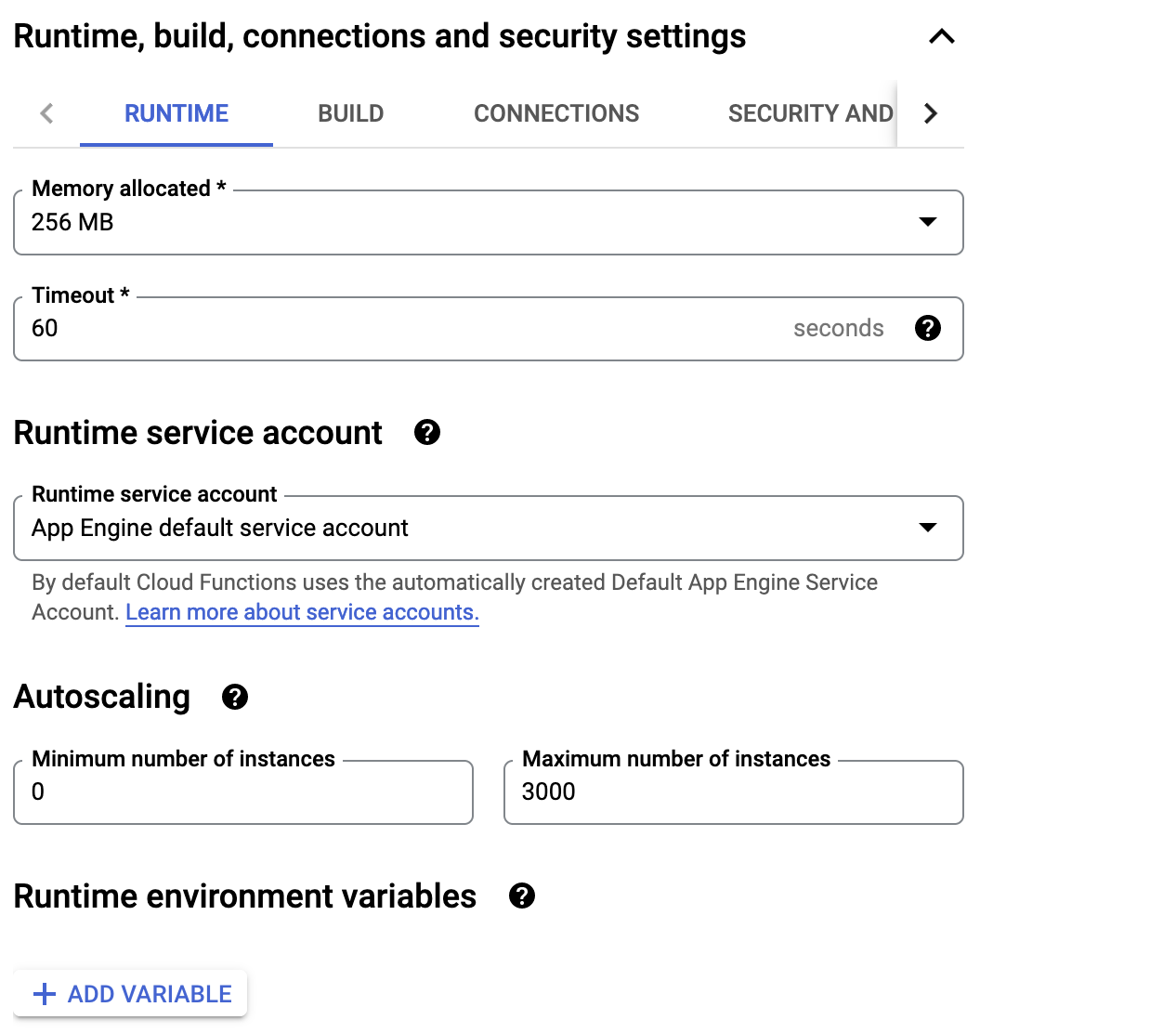

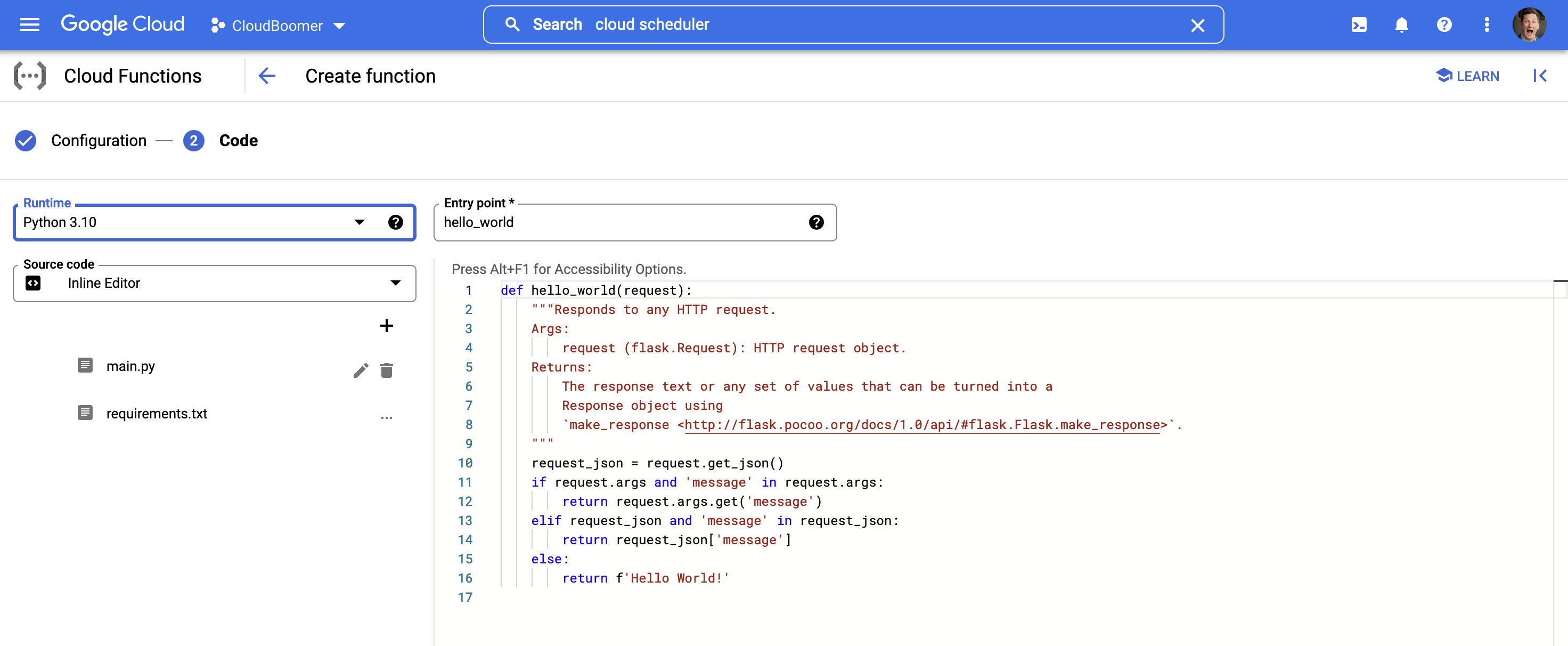

I accept the defaults for the rest of this and move on to the code portion. I’m greeted immediately by a boilerplate skeleton of a function and a dropdown of which language runtime I want to use.

There is a sarcastic number of options, so I go with Python 3.10.
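For the curious, a Pub/Sub-triggered Python function boils down to something like the sketch below. It assumes the 1st generation background-function signature of `(event, context)`. The name `handle_tick` is a made-up placeholder. The five-minute cadence lives in the Cloud Scheduler job, presumably as a cron expression along the lines of `*/5 * * * *`, not in the code.

```python
import base64


def handle_tick(event, context):
    """Entry point for a Pub/Sub-triggered function (hypothetical name).

    event:   dict whose "data" field, when present, holds the message
             body as a base64-encoded string.
    context: metadata about the event; unused in this sketch.
    """
    raw = event.get("data")
    # Scheduler messages can carry an arbitrary payload; decode it if present.
    message = base64.b64decode(raw).decode("utf-8") if raw else ""
    print(f"Scheduler tick received: {message!r}")
    return message
```

The payload barely matters here. The message arriving at all is the signal to go do the work.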

At this point, the infrastructure is basically set; it’s time for me to write some crappy Python to make all of this do something useful.

That didn’t take very long to bang out, because what I want is relatively simple. Whenever Microsoft Azure’s Twitter account tweets something that isn’t a retweet or a reply, I want the function to trigger my bot to quote-tweet Azure’s tweet in all caps along with the kind of commentary that you’d generally expect from what appears to be Azure’s target market.
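The logic is about as simple as it sounds. Here is a rough sketch of the filtering and shouting, not the bot's actual code. The `Tweet` class is a hypothetical stand-in for whatever the Twitter client hands back, and the commentary string in the usage note is an invented placeholder.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tweet:
    """Hypothetical stand-in for a tweet object from the Twitter client."""
    id: int
    text: str
    is_retweet: bool = False
    in_reply_to: Optional[int] = None  # ID of the tweet being replied to, if any


def is_original(tweet: Tweet) -> bool:
    """True only for tweets that are neither retweets nor replies."""
    return not tweet.is_retweet and tweet.in_reply_to is None


def boomer_quote(tweet: Tweet, commentary: str) -> str:
    """Build the quote-tweet text: the original in all caps, then commentary."""
    return f"{tweet.text.upper()} {commentary}"
```

Calling `boomer_quote(Tweet(id=1, text="Hello cloud"), "WHAT?")` yields `"HELLO CLOUD WHAT?"`. Actually posting that as a quote tweet is left to the client library.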

I stored the Twitter API credentials in the Google Cloud Function’s environment variables section, but then I was left with a problem: Once this horrible bot has tweeted about an Azure tweet, I don’t want it to tweet that again. Where do I store the single piece of state for this entire application, the ID of the last tweet it saw?

This is a deceptively complex problem, and none of the cloud providers has a differentiated answer for it. Every option I explored required at least one of two things: setting up a whole means of accessing a different subsystem to retrieve the tweet ID, or storing API credentials for a third-party service to hold that data.

One horrible idea that occurred to me was to use a second Twitter account as a database and simply have it tweet out the ID in question. After all, I already had the Tweepy library integrated. I dismissed this idea as nearly as deranged as using Route 53 as a database and opted instead for Google’s Cloud Firestore. This is a NoSQL document database that does an awful lot of stuff I really could not care less about, but it also takes a key and returns a value, which is all I really want.

The shining jewel here is that because both Firestore and my Cloud Function are in the same Google Cloud project, by default the security permissions just worked without me having to strike a deal with the devil. I wasn’t expecting that and am absolutely enchanted by the experience.
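In practice, the "takes a key and returns a value" part looks roughly like this. It is a minimal sketch that assumes the `google-cloud-firestore` client library. The collection, document, and field names are invented for illustration. Note that `firestore.Client()` is called with no credentials at all, which is the "just worked" part.

```python
STATE_COLLECTION = "bot_state"      # hypothetical collection name
STATE_DOCUMENT = "last_seen_tweet"  # hypothetical document name


def state_doc():
    """Return a reference to the one document holding the bot's state.

    With no arguments, firestore.Client() uses the project and default
    credentials of the environment it runs in.
    """
    from google.cloud import firestore  # requires google-cloud-firestore
    return firestore.Client().collection(STATE_COLLECTION).document(STATE_DOCUMENT)


def read_last_seen(doc_ref) -> int:
    """Fetch the last tweet ID handled, or 0 if nothing is stored yet."""
    snapshot = doc_ref.get()
    if not snapshot.exists:
        return 0
    return int(snapshot.to_dict().get("tweet_id", 0))


def write_last_seen(doc_ref, tweet_id: int) -> None:
    """Overwrite the stored ID with the newest tweet handled."""
    doc_ref.set({"tweet_id": tweet_id})
```

Each run would call `read_last_seen(state_doc())` first, skip anything at or below that ID, and call `write_last_seen` after tweeting.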

The unsolved problem as of this writing is that I haven’t gotten Google Cloud Source Repositories to sync properly from my GitHub repository, so I’m reduced to copying and pasting code in like some kind of monster from 1998.

But all things considered, it was a delightful development experience that I’d absolutely recommend to someone who’s starting out.

And if you care about what Azure is doing, you should absolutely keep up with the antics of my @CloudBoomer bot account which, if you’ve gotten this far with my nonsense, will no doubt tickle you.